Review Don't Do

Streamlining the user experience and improving speed to benefit for returning customers

Should returning users be subject to the same onboarding process as new users?

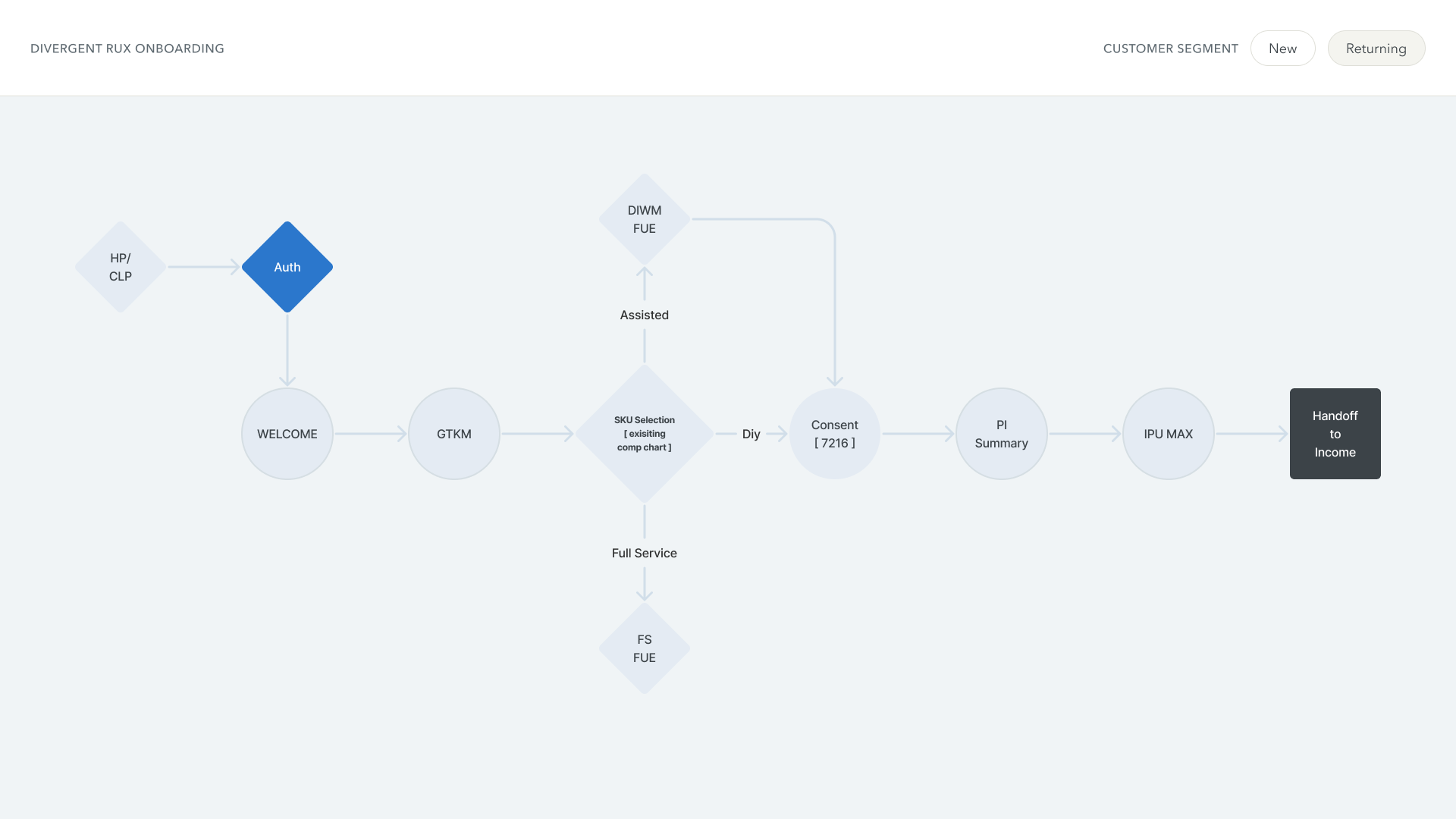

The Review Don’t Do project streamlined onboarding for returning DIY and DIWM users by personalizing and speeding up the process. It improved the user experience by eliminating redundant steps and offering customized product suggestions.

Scope

- Strategy

- User Research

- UX

Measured success

- Improved A2I by a 103 I2C

Elevating Onboarding for Returning Users

The challenge

Users returning to the platform faced a daunting task: navigating an onboarding process designed for new users. This not only prolonged their journey but also diluted the personalized experience they expected. Analysis revealed a critical opportunity for improvement: streamline onboarding for returning users to reduce fatigue and enhance personalization. A seamless, efficient process for these users is crucial for improving retention, reducing abandonment, and ultimately increasing completion rates.

Research and Insights

The journey to streamline the onboarding experience for returning users began with a deep dive into user research, conducted as two comprehensive rounds of rapid prototyping. This research was pivotal in shaping the project, ensuring that the solutions were grounded in real user needs and behaviors.

Long and Tedious Flow

Subjecting both new and returning users to an identical process can lead to user fatigue and increased drop-offs, undermining the overall user experience and retention. This approach overlooks the opportunity to tailor experiences according to user familiarity and needs, causing frustration among returning users who expect efficiency and speed. Consequently, failing to differentiate these processes may result in missed opportunities for enhancing user satisfaction, engagement, and loyalty, ultimately impacting the platform’s growth and success.

Key findings

- Returning customers expressed frustration with repetitive questions and unclear product recommendations, which led to confusion and diminished trust in TurboTax.

- There was a strong desire for a system that could recognize life changes and suggest personalized product choices based on these changes.

- Customers valued efficiency and personalization, seeking a streamlined process that would remember their information year-to-year and minimize the time spent onboarding.

Project Outcome

Results and Impact

This project marked a significant leap forward in the quest to personalize and streamline the user's experience. Early metrics indicate a substantial improvement in onboarding efficiency, with notable increases in A2I (Auth to Income) and A2C (Auth to Complete) rates among returning users. User feedback has been overwhelmingly positive, highlighting the reduction in effort and enhanced personalization as key benefits.

Insightful Learnings

This project underscored the importance of personalization and efficiency in the user onboarding experience. One key learning was the critical role of data accuracy and machine learning models in personalizing the user journey. As we move forward, our team is focused on refining the ML models and exploring additional avenues for personalization, further reducing onboarding friction and enhancing user satisfaction.

The success of Review Don’t Do is a testament to the dedication and collaboration of our team

Special thanks to Sai Manohar Nethi for navigating through complex edge cases, and to JP Phousirith, Joey Hu, Cole Bickford, Norman Bell, and Erik Wirtz for their relentless testing efforts. Appreciation also goes to Jing Yuan, Shankar, @tkang1, and @jhsu3 for their instrumental role in developing the RedOn Model, and to Prageesh Gopakumar, Patrick Tsui, Danh Dang, and Will Jang for their invaluable PD support. This project was a collective effort, and its success reflects the hard work and innovation of the entire team.